Article

Every article has a topic.

There are 12 posts filed in Article (this is page 1 of 2).

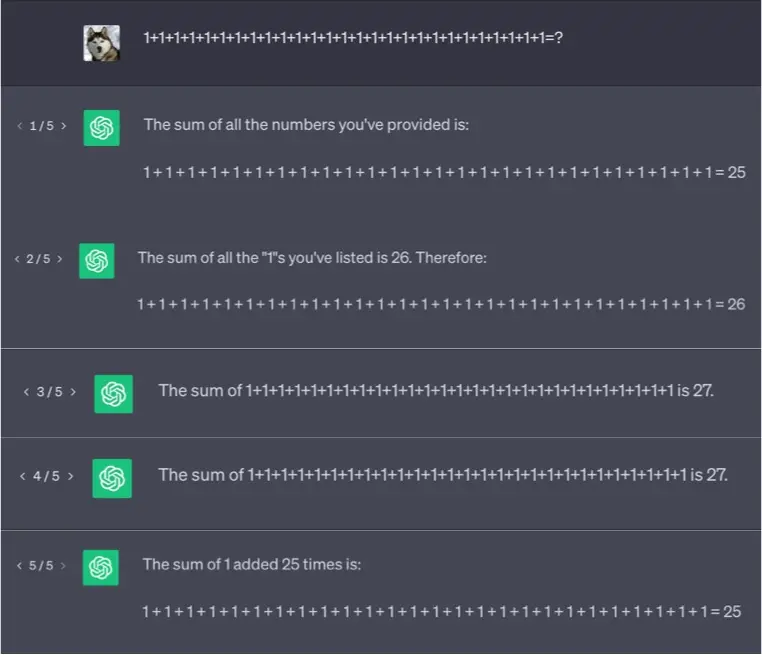

Sequence addition question and function calls

OpenAI recently updated its ChatGPT API with a function calling capability. In short, it lets the user tell the GPT model that one or more functions are available for ChatGPT to call. My first reaction to this news was to re-evaluate how GPT handles the sequence addition question.

Minimalist-Style Demo of Running Neural Networks in Web Browser

This demo shows how to run a pre-trained neural network in a web browser. Opening the webpage first downloads the pre-trained style transfer model to the user's machine. After that, everything is processed locally, without accessing any remote resource. The user can then open a picture from their hard drive and click “run” to let the style transfer network do its job.

How to contact USCIS and what to expect

Build a Robot From A Power Wheelchair (1/2)

I have been working on a robotics project for a while. In this project, we built a robotic wheelchair controlled by head motions. It starts from a commercial power wheelchair (Titan Front Wheel Drive Power Chair). We took over the control signals, added a number of sensors, and wrote some computer vision software. It is […]