Get a deterministic set of random permutations every day

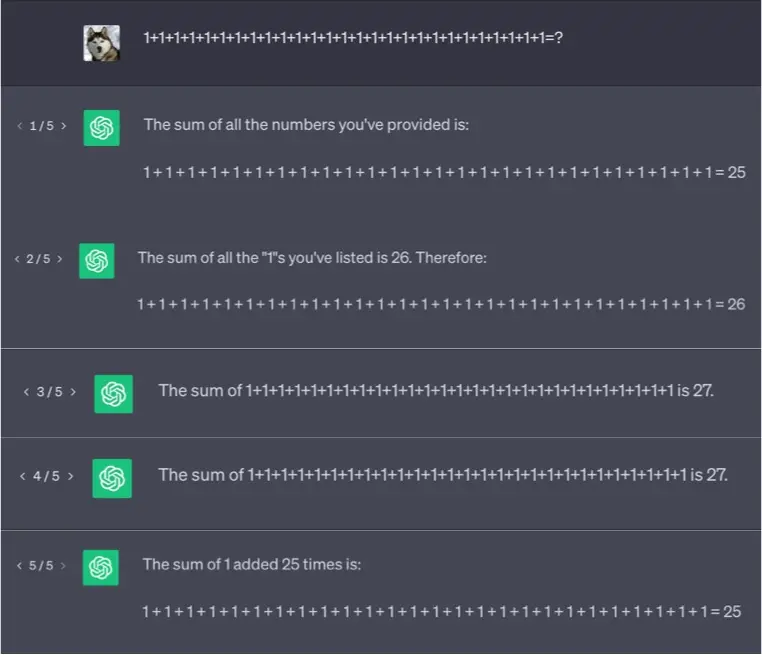

I was looking for a way to generate a deterministic set of random permutations every day. These days I usually try ChatGPT first, but it didn’t give me the answer I wanted on this one. I ran into this need while making this webpage to show some generated coloring pages in a waterfall layout. To elaborate […]
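
To make the goal concrete, here is a minimal Python sketch of one common way to do this: seed a pseudo-random generator with the current date, so every run on the same day produces the same shuffles and the next day produces new ones. This is an illustration under my own assumptions, not necessarily what the webpage does. The names `daily_permutations`, `n_items`, and `n_perms` are hypothetical.

```python
import datetime
import random


def daily_permutations(n_items, n_perms, day=None):
    """Return n_perms shuffles of range(n_items) that depend only on the date.

    Hypothetical helper for illustration; `day` defaults to today's local date.
    """
    if day is None:
        day = datetime.date.today()
    # The ISO date string (for example "2024-01-31") is the seed, so the
    # sequence of shuffles is fixed for a given calendar day.
    rng = random.Random(day.isoformat())
    perms = []
    for _ in range(n_perms):
        perm = list(range(n_items))
        rng.shuffle(perm)  # in-place shuffle using the seeded generator
        perms.append(perm)
    return perms


if __name__ == "__main__":
    # Same output for every run on the same day.
    for perm in daily_permutations(n_items=8, n_perms=3):
        print(perm)
```

Using a private `random.Random` instance rather than the module-level functions keeps the daily seed from affecting any other randomness in the program. Note that the result depends on the machine's local date, so two servers in different time zones can disagree near midnight.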